Reinforcement Learning Penguins (Part 4/4) | Unity ML-Agents

Training and Inference

Before you can train your penguins, you will need to set up a configuration file.

Trainer Config YAML

In this section, you’ll add configuration info that the ML-Agents training program will use. Some of these are hyperparameters — a term that may be familiar to you if you’ve done some deep learning in the past — and others are settings specific to ML-Agents. While optional for this tutorial, you may want to explore these parameters to strengthen your understanding.

Inside the ml-agents directory you downloaded from GitHub, there is a config directory. Inside that, you will find a ppo directory that contains a lot of yaml files. We need to create a new one for our penguin. For consistency, we'll call it Penguin.yaml, but you could call it something else (for example, PenguinVisual.yaml, if you wanted to try visual observations but keep the original config file). It also doesn't need to be in this folder, so if you'd rather put it somewhere else (like a GitHub repo), go for it.

- Create and open a new file: config\ppo\Penguin.yaml

- Add the following lines to the file:

behaviors:
  Penguin:
    trainer_type: ppo
    hyperparameters:
      batch_size: 128
      buffer_size: 2048
      learning_rate: 0.0003
      beta: 0.01
      epsilon: 0.2
      lambd: 0.95
      num_epoch: 3
      learning_rate_schedule: linear
    network_settings:
      normalize: false
      hidden_units: 256
      num_layers: 2
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 1000000
    time_horizon: 128
    summary_freq: 5000
    threaded: true

Note: In this case, the word "Penguin" in the yaml file needs to match the Behavior Name you set inside Unity in the penguin's Behavior Parameters. If these don't match, your agent will not train correctly.
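If you want to sanity-check the name match before starting a long training run, here is a minimal Python sketch. It assumes the config has already been loaded into a dict (for example with PyYAML's yaml.safe_load) and that the name must match exactly, including capitalization. The has_behavior helper is hypothetical and is not part of ML-Agents.

```python
# Dict mirroring the top of Penguin.yaml (only the keys needed for this check).
config = {
    "behaviors": {
        "Penguin": {"trainer_type": "ppo"},
    }
}


def has_behavior(config: dict, behavior_name: str) -> bool:
    """Return True if behavior_name is a key under 'behaviors' (exact match assumed)."""
    return behavior_name in config.get("behaviors", {})


print(has_behavior(config, "Penguin"))  # True
print(has_behavior(config, "penguin"))  # False: capitalization differs
```

The second argument stands in for whatever you typed into the Behavior Name field in Unity.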

These configuration settings define how training will proceed. This is a fairly intricate topic, so we're going to skip over it for this tutorial. If you would like to know more about these parameters, read the Training Configuration File documentation, which does a great job explaining each one.
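To get a rough feel for how a few of these numbers interact, here is a small Python sketch. It assumes that PPO splits each full buffer into batch-sized chunks and passes over them num_epoch times, and that the linear schedule shrinks the learning rate in proportion to the steps remaining. Both are simplifications of what the trainer actually does, and the helper names are made up for illustration.

```python
# Values copied from Penguin.yaml.
batch_size = 128
buffer_size = 2048
num_epoch = 3
learning_rate = 0.0003
max_steps = 1_000_000


def updates_per_buffer(buffer_size: int, batch_size: int, num_epoch: int) -> int:
    """Gradient updates per full buffer, assuming batch-sized chunks per epoch."""
    return (buffer_size // batch_size) * num_epoch


def linear_learning_rate(step: int, initial_rate: float, max_steps: int) -> float:
    """Simplified linear decay: full rate at step 0, zero at max_steps."""
    fraction_left = max(0.0, 1.0 - step / max_steps)
    return initial_rate * fraction_left


print(updates_per_buffer(buffer_size, batch_size, num_epoch))  # 48
print(linear_learning_rate(500_000, learning_rate, max_steps))
```

With these settings, halfway through training the learning rate would be about half of its starting value under this simplified model.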

Curriculum (Deprecated)

Just a quick note here: this tutorial used to rely on Curriculum Learning, which made the feeding task easier at the start and gradually made it more difficult. While updating this tutorial, I experimented with cutting out the curriculum, and the penguins still trained reliably and only a little bit slower. Curriculum is a lot more complex (though also more powerful) than it used to be, so I'm removing it from this tutorial. We'll probably do another curriculum tutorial on the Immersive Limit YouTube channel soon.

Training with Python

In this section, you’ll train the penguin agents using a Python program called mlagents-learn. This program is part of the ML-Agents project and makes training much easier than writing your own training code from scratch. You will need to install Anaconda and then install the ML-Agents Python libraries, as detailed in the Anaconda Setup tutorial.

It is important that the version of your training code matches the version in your Unity project. If you updated one, but not the other, training will fail.
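One way to see which Python-side version you have is sketched below. It assumes the pip package names are mlagents and mlagents-envs. The installed_version helper is a hypothetical convenience wrapper around the standard library. You would still need to compare the result by hand against the ML-Agents package version shown in Unity's Package Manager.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package_name: str) -> Optional[str]:
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


# Run this inside your activated ml-agents environment.
for name in ("mlagents", "mlagents-envs"):
    print(name, installed_version(name) or "not installed")
```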

- Open Anaconda prompt.

- Activate your ml-agents environment.

- Navigate to the ml-agents directory you downloaded and unzipped from GitHub.

- Type in the following command:

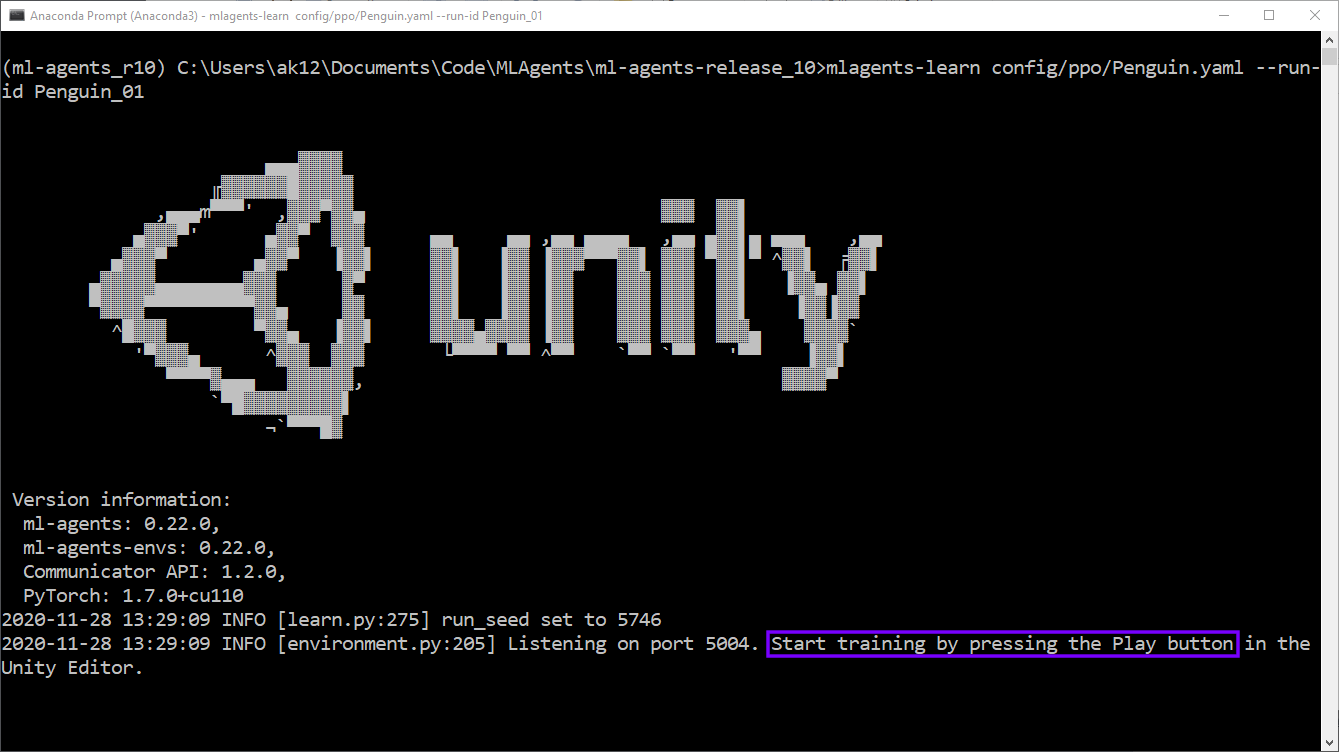

mlagents-learn config/ppo/Penguin.yaml --run-id Penguin_01

Here’s a breakdown of the different parts of the command:

mlagents-learn: The Python program that runs training

config/ppo/Penguin.yaml: A relative path to the configuration file (this can also be an absolute path)

--run-id Penguin_01: A unique name we choose to give this round of training (you can make this whatever you want)
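If you end up launching many runs, you might assemble the command from its parts in a small script. This is only a sketch: build_train_command is a hypothetical helper, and it uses only the two arguments described above.

```python
import shlex


def build_train_command(config_path: str, run_id: str) -> list:
    """Assemble the mlagents-learn command from its parts as an argument list."""
    return ["mlagents-learn", config_path, "--run-id", run_id]


command = build_train_command("config/ppo/Penguin.yaml", "Penguin_01")
print(shlex.join(command))
# mlagents-learn config/ppo/Penguin.yaml --run-id Penguin_01
```

The resulting list could be handed to subprocess.run from inside the activated environment, though typing the command directly works just as well.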

- Run the command.

- When prompted, press Play in the Unity Editor to start training (Figure 01).

Figure 01: Anaconda prompt window: mlagents-learn is waiting for you to press the Play button

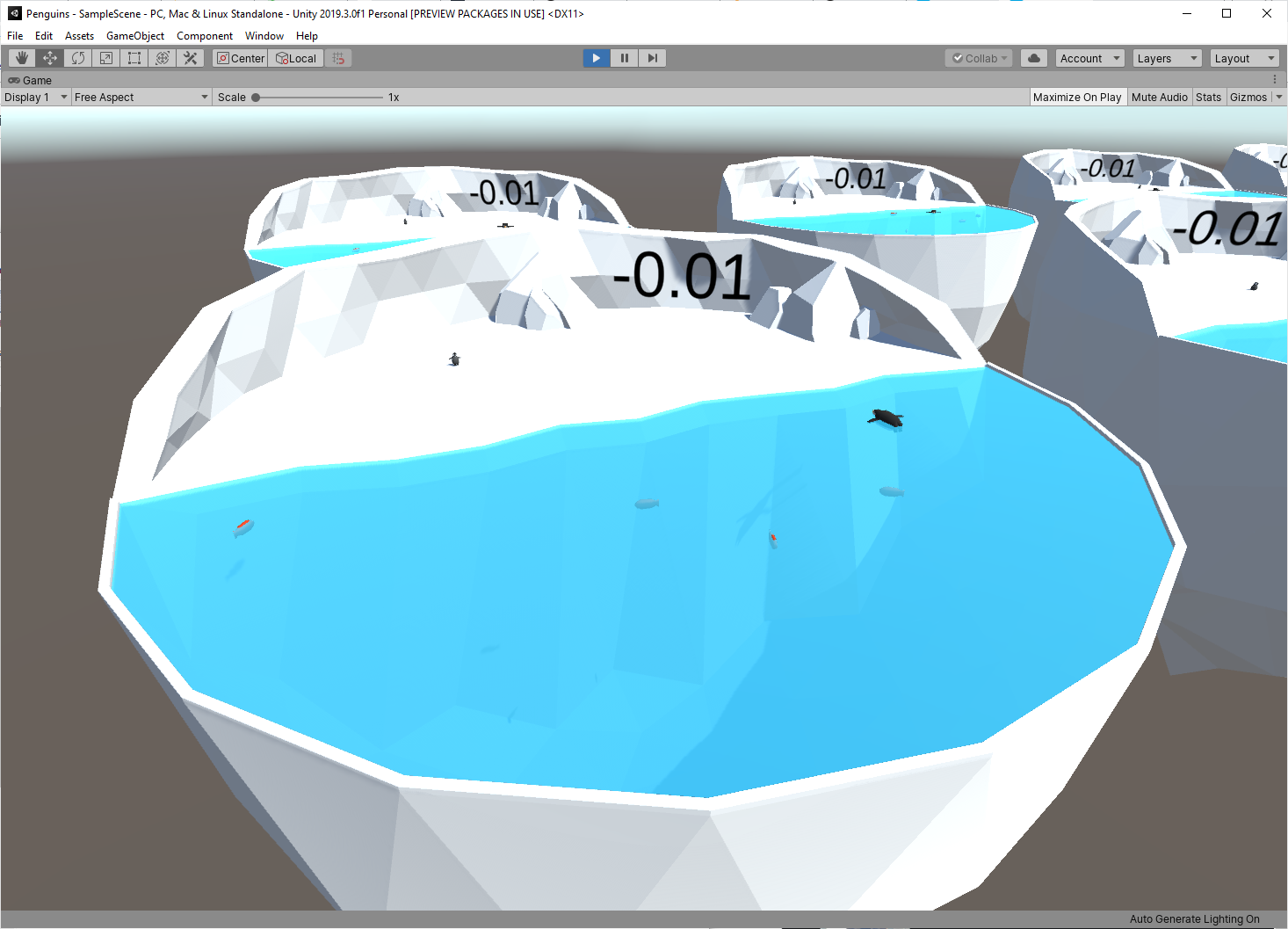

The penguins will be moving very fast and the frame rate might be choppy because training runs at 20x speed.

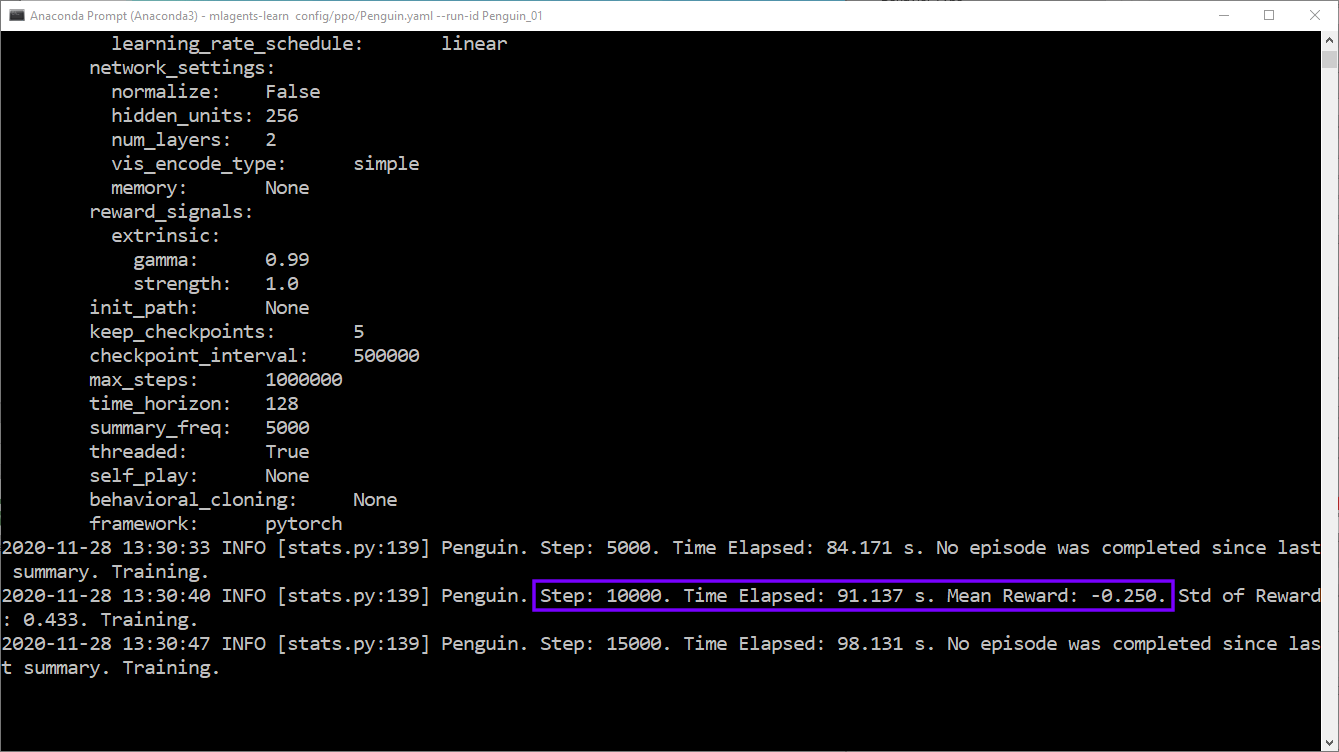

Figure 02: Training started

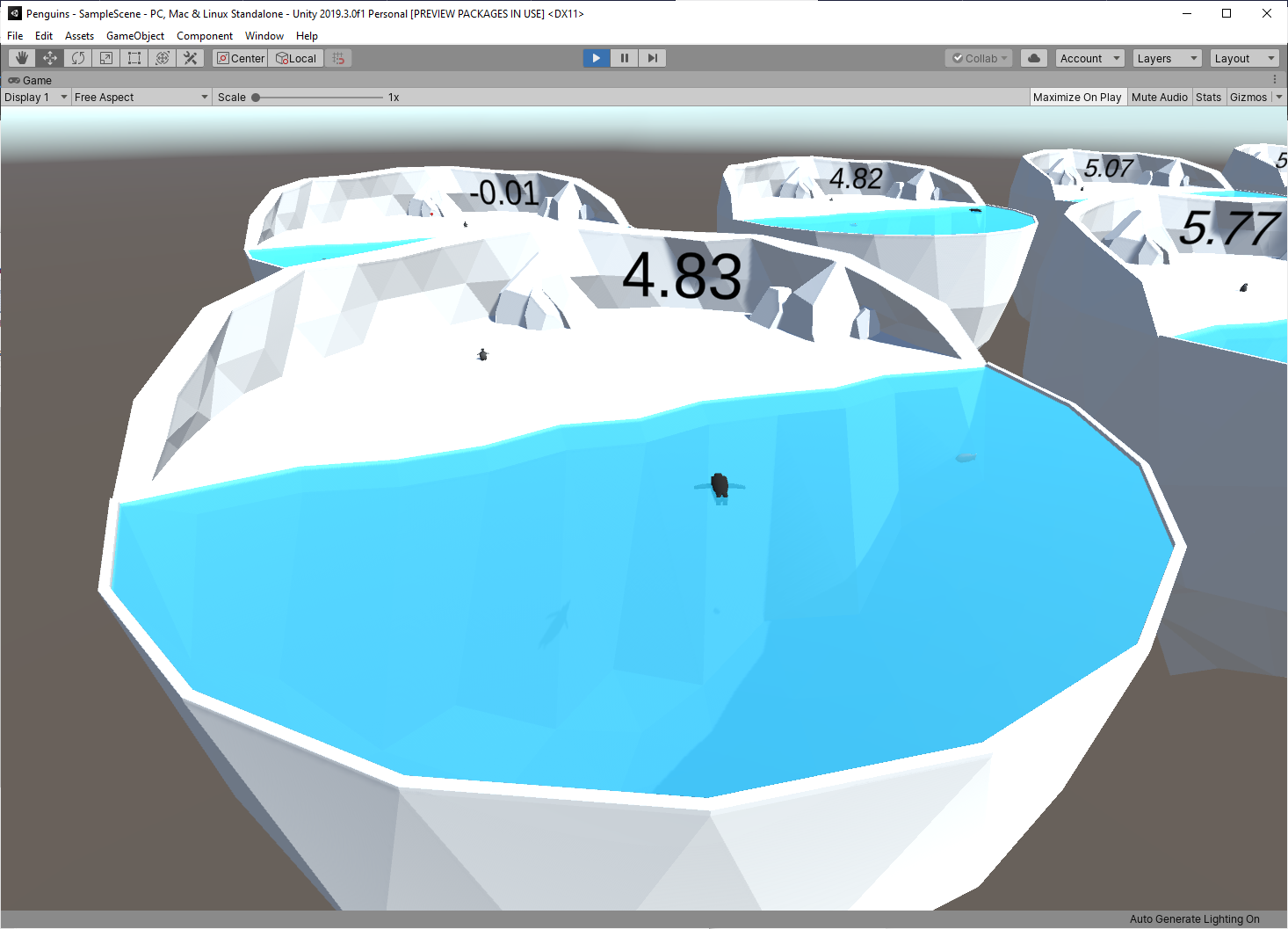

As the training proceeds, you will get periodic updates (Figure 03). Each of these will include:

- Step: The number of timesteps that have elapsed

- Time Elapsed: How much time the training has been running (in real-world time)

- Mean Reward: The average reward (since the last update)

- Std of Reward: The standard deviation of the reward (since the last update)

Figure 03: Anaconda prompt window: periodic training updates
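To make the last two values concrete, here is a sketch of how a mean and standard deviation could be computed from the rewards of episodes that finished since the previous update. The episode rewards are made-up numbers, and the use of the population standard deviation is an assumption about how the trainer summarizes them.

```python
from statistics import mean, pstdev


def summarize_rewards(episode_rewards):
    """Return (mean, population standard deviation) of a list of episode rewards."""
    return mean(episode_rewards), pstdev(episode_rewards)


# Made-up total rewards for four finished episodes.
episode_rewards = [6.0, 8.0, 7.0, 9.0]
mean_reward, std_reward = summarize_rewards(episode_rewards)
print(f"Mean Reward: {mean_reward:.3f}. Std of Reward: {std_reward:.3f}.")
```

A falling Std of Reward alongside a rising Mean Reward suggests the penguins are becoming both better and more consistent.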

Eventually, your penguins should get very good at catching fish. The Mean Reward will stop increasing (around a value of 7.5 points) when the penguins can't pick up fish any faster. At this point, you can stop training early by pressing the Play button in the Editor again. If you let it go, it will keep training until it reaches "max_steps: 1000000" as specified in the yaml config file.
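Deciding when the Mean Reward has "stopped increasing" is a judgment call. One rough rule of thumb is sketched below. The has_plateaued helper, its window size, and its threshold are all hypothetical choices, and the reward history is made up for illustration.

```python
def has_plateaued(mean_rewards, window=3, min_gain=0.1):
    """True if the best of the last `window` updates beats the earlier best by less than min_gain."""
    if len(mean_rewards) <= window:
        return False  # not enough history to compare against
    recent_best = max(mean_rewards[-window:])
    earlier_best = max(mean_rewards[:-window])
    return recent_best - earlier_best < min_gain


# Made-up Mean Reward values copied from successive training updates.
still_improving = [0.5, 2.0, 4.5, 6.5]
levelled_off = [0.5, 2.0, 4.5, 6.5, 7.5, 7.5, 7.4, 7.5]

print(has_plateaued(still_improving))  # False
print(has_plateaued(levelled_off))     # True
```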

The training will export a Penguin.onnx file that represents a trained neural network for your penguin (Figure 04).

Note: This neural network will only work for your current penguins. If you change the observations in the CollectObservations() function in the PenguinAgent script or RayPerceptionSensorComponent3D, the neural network will not work. The outputs of the neural network will correspond to the actionBuffers parameter of the OnActionReceived() function in the PenguinAgent script.
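A toy example may help show why changing the observations breaks a trained network. The sketch below is not how ML-Agents implements its models. It is a tiny stand-in "network" with a fixed number of inputs, and make_linear_policy and its weights are invented for illustration.

```python
def make_linear_policy(weights):
    """Build a toy policy. weights has one row per output, one weight per observation."""
    expected_size = len(weights[0])

    def policy(observations):
        # A trained network has a fixed input size, just like this check.
        if len(observations) != expected_size:
            raise ValueError(
                f"expected {expected_size} observations, got {len(observations)}"
            )
        return [sum(w * o for w, o in zip(row, observations)) for row in weights]

    return policy


policy = make_linear_policy([[1.0, 0.0, -1.0], [0.5, 0.5, 0.0]])
print(policy([1.0, 2.0, 3.0]))  # [-2.0, 1.5]

try:
    policy([1.0, 2.0, 3.0, 4.0])  # one extra observation
except ValueError as error:
    print(error)  # expected 3 observations, got 4
```

Adding or removing an observation changes the input size, so the old weights no longer line up with what the agent sends.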

Figure 04: Anaconda prompt window: exported .onnx file path

Inference

In this section, you’ll set up the penguins to perform inference. This will cause your penguins to make decisions using the neural network you trained in the previous section. Once you’ve set up the neural network in your project, you no longer need Python for intelligent decision making.

- Create a new folder in your Unity project called NNModels inside Assets\Penguin.

- Find the .onnx file that was exported at the end of training.

- In this case, it’s results\Penguin_01\Penguin.onnx

- Drag the .onnx file into the NNModels folder in Unity (Figure 05).

Figure 05: Drag Penguin.onnx into the NNModels folder in Unity

- Open the Penguin Prefab by double-clicking it in the Project tab.

- Drag the Penguin nn model into the Model field of the Behavior Parameters component (Figure 06).

Figure 06: Drag the Penguin nn model into the Model field of BehaviorParameters on the Penguin

- Go back to the main Scene and press Play.

Figure 07: Penguins collecting fish using neural networks to make decisions
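If you retrain often, the file-handling steps above (finding the exported model and placing it in NNModels) could be scripted instead of done by drag and drop. The sketch below assumes the results\Penguin_01\Penguin.onnx layout shown earlier. Both helpers are hypothetical, and you would still assign the model in the Behavior Parameters component yourself.

```python
import shutil
from pathlib import Path


def exported_model_path(results_dir, run_id, behavior_name):
    """Build the expected path of the exported model: results/<run-id>/<behavior>.onnx."""
    return Path(results_dir) / run_id / f"{behavior_name}.onnx"


def copy_model_into_project(model_path, unity_project_dir):
    """Copy the model into Assets/Penguin/NNModels, creating the folder if needed."""
    target_dir = Path(unity_project_dir) / "Assets" / "Penguin" / "NNModels"
    target_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(model_path, target_dir))


model_path = exported_model_path("results", "Penguin_01", "Penguin")
print(model_path)
```

Unity imports files placed under Assets the next time the Editor gains focus, so the copied model should appear in the Project tab much as if you had dragged it in.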

The penguins should start catching fish and bringing them to their babies! This is called “inference,” and it means that the neural network is making the decisions. Our agent is giving it observations and reacting to the decisions by taking action.

These .onnx files will be included with your game when you build it. They work across all of Unity’s build platforms. If you want to use a neural network in a mobile or console game, you can — no extra installation will be required for your users.

Conclusion

You should now have a fully trained ML-Agent penguin that can effectively catch swimming fish and feed them to its baby. Next you should apply what you’ve learned to your own project. Don’t get discouraged if training doesn’t work right away — even this small example required many hours of experimentation to work reliably.

Tutorial Parts

Reinforcement Learning Penguins (Part 1/4)

Reinforcement Learning Penguins (Part 2/4)

Reinforcement Learning Penguins (Part 3/4)

Reinforcement Learning Penguins (Part 4/4)