3D Scanned Objects in Synthetic Image Datasets

A synthetic image I made with a photo background and a 3d scanned foreground.

The Idea

I've had a cool idea kicking around in my head for a while now, and it's about time I wrote about it and started working on it.

The idea: Use 3d scans of real world objects to create synthetic image datasets for deep learning.

A little background

Last year (June 2018), I made a synthetic image dataset and used it to train a neural net to detect cigarette butts. Rather than take the usual approach of hand-labeling thousands of images, I decided to try something different. I figured that since cigarette butts all look very similar, I could photograph them, remove the background, and paste those foregrounds at random positions over different backgrounds (as long as those backgrounds didn't contain any instances of the object of interest). It turns out this works really well: I've had great success with both cigarette butts and weeds.
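To make the paste step concrete, here is a minimal sketch. It assumes the cut-out foreground is stored as an RGBA NumPy array (alpha marks the object) and the background as an RGB array; `paste_foreground` is a hypothetical helper for illustration, not code from cocosynth. It uses standard alpha ("over") compositing and shows why the labels come for free: the object's bounding box is known from where it was placed. A real pipeline would typically also randomize scale, rotation and brightness, which this sketch leaves out.

```python
import numpy as np


def paste_foreground(background, foreground, rng):
    """Alpha-composite an RGBA foreground onto an RGB background at a random
    position. Returns the new image and the object's (x, y, w, h) box.

    background: (H, W, 3) uint8 array
    foreground: (h, w, 4) uint8 array; alpha > 0 marks the object
    """
    bh, bw, _ = background.shape
    fh, fw, _ = foreground.shape
    if fh > bh or fw > bw:
        raise ValueError("foreground must fit inside the background")

    # Find the object's pixels first; a fully transparent cut-out has no label.
    ys, xs = np.nonzero(foreground[:, :, 3] > 0)
    if ys.size == 0:
        raise ValueError("foreground has no opaque pixels")

    # Random top-left corner such that the whole foreground stays in frame.
    y = int(rng.integers(0, bh - fh + 1))
    x = int(rng.integers(0, bw - fw + 1))

    # "Over" compositing: out = alpha * fg + (1 - alpha) * bg.
    alpha = foreground[:, :, 3:4].astype(np.float32) / 255.0
    region = background[y:y + fh, x:x + fw].astype(np.float32)
    fg_rgb = foreground[:, :, :3].astype(np.float32)
    blended = alpha * fg_rgb + (1.0 - alpha) * region

    out = background.copy()
    out[y:y + fh, x:x + fw] = np.round(blended).astype(np.uint8)

    # The label comes for free: a tight box around the opaque pixels,
    # shifted by the paste offset.
    bbox = (x + int(xs.min()), y + int(ys.min()),
            int(xs.max() - xs.min() + 1), int(ys.max() - ys.min() + 1))
    return out, bbox
```

For example, pasting a 10×10 opaque white square (inside a larger transparent cut-out) onto a black 100×100 background yields a 10×10 box that lands exactly on the white pixels, wherever the random offset put them.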

Assuming the objects you want to detect are fairly similar to each other, the only real problem with this approach is that it's still a pain in the ass to cut out foregrounds by hand. Sure, you save hundreds of hours compared to hand labeling, but building the dataset still takes hours of manual work.

3d scans as an alternative

In my mind, the next logical step is to use photogrammetry, i.e. building 3d scans of objects from ordinary photos. 3d scanning technology has improved quite a bit over the past few years, and I've been blown away by some of the examples I've seen on Sketchfab. I knew it would only be a matter of time before this tech showed up on a phone, and sure enough, the Galaxy Note 10 was announced just a few days ago with an impressive 3d scanner! I'd be shocked if the iPhone doesn't follow suit, and I think it's a safe bet that HoloLens 2 will have an amazing scanner built in as well.

My plan

Since scanning is only going to get easier, I might as well get out in front of this and try it now. Here's what I'm going to do.

Use the free photogrammetry software Meshroom, plus photos from my iPhone, to generate a 3d scan.

Use Blender to clean up the model.

Render the 3d model from a lot of different angles using either Blender or Unity.

Generate foregrounds with transparent backgrounds so that I can feed them into my cocosynth pipeline, just like manually cut foregrounds.

Superimpose them over various 2d image backgrounds to create thousands of unique images, labeled automatically.

Use the synthetic dataset to train a neural net to detect the object in real images.
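Rendering the model "from a lot of different angles" needs a set of camera positions. One common way to spread views nearly evenly around an object (an assumption here; the plan above doesn't specify how the angles are chosen) is a Fibonacci sphere: step the height evenly from top to bottom and rotate each successive point by the golden angle. `fibonacci_viewpoints` is a hypothetical helper that only computes positions, assuming the scanned object sits at the origin; in Blender you would move the camera to each point and aim it at the object (for example with a Track To constraint), and in Unity `Transform.LookAt` does the same job.

```python
import math


def fibonacci_viewpoints(n, radius=1.0):
    """Return n (x, y, z) camera positions spread nearly uniformly over a
    sphere of the given radius, centred on an object assumed to be at the
    origin."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))  # about 2.39996 rad
    points = []
    for i in range(n):
        # Heights are evenly spaced in (-1, 1); equal height bands on a
        # sphere have equal surface area, so coverage stays uniform.
        z = 1.0 - (2.0 * i + 1.0) / n
        ring = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at z
        theta = golden_angle * i       # successive points rotate by the golden angle
        points.append((radius * ring * math.cos(theta),
                       radius * ring * math.sin(theta),
                       radius * z))
    return points
```

If views from underneath the object aren't useful (e.g. the bottom of a pot sitting on a table), one simple option is to keep only the points with `z > 0`.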

I'm using this YouTube tutorial to learn Meshroom and how to clean up the model in Blender: Photogrammetry in Meshroom & Blender

Initial experiment

My photogrammetry lightbox setup

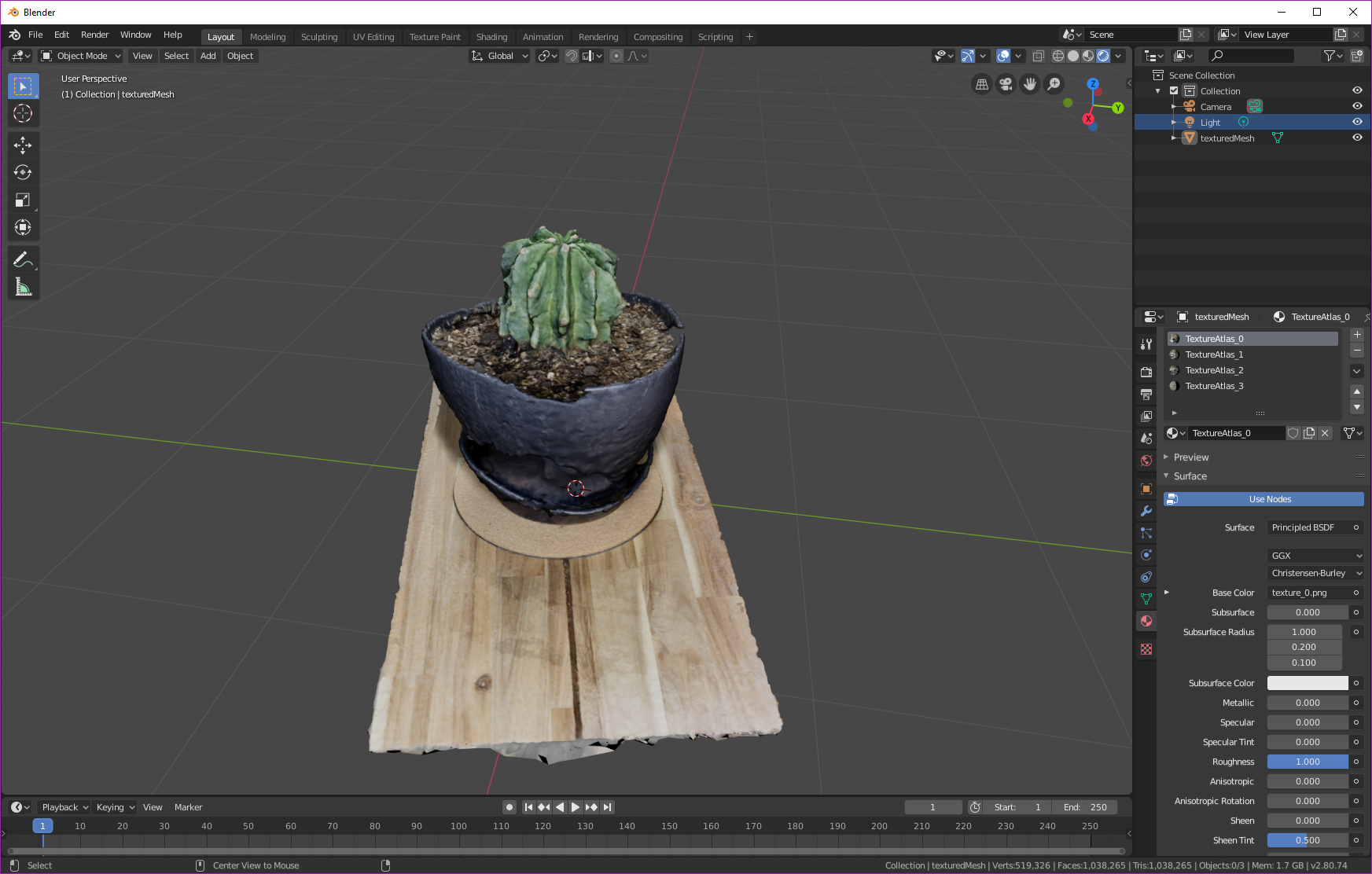

I've done one initial experiment, and admittedly the result isn't very impressive. I took 51 photos of a cactus lit by the lightboxes I use for my YouTube videos. Meshroom processed them into a mesh while I wrote this blog post (it took roughly an hour). The black pot didn't turn out well and the cactus looks a bit rough, but it's a decent starting point to experiment from.

The 3d mesh, generated by Meshroom and imported into Blender for cleanup and rendering.

I'm not too concerned, for a couple of reasons. First, 3d scanning is certainly going to improve. Second, based on what I've seen on Sketchfab, other people are getting much better results with Meshroom than I am, so I just need to figure out what they're doing differently.

Ganesh, scanned by AliceVision (creator of Meshroom)

I'll post updates as I make progress…